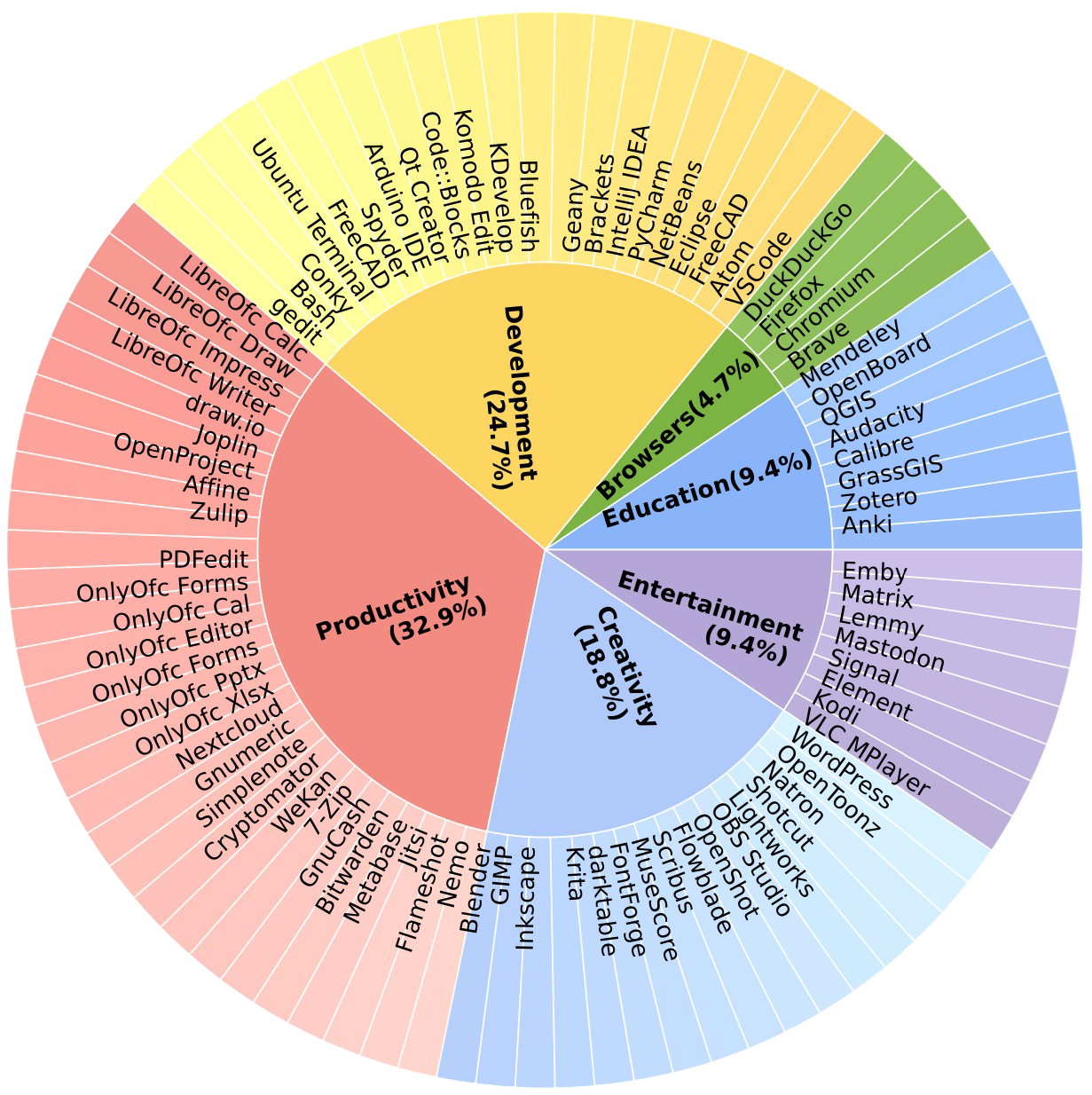

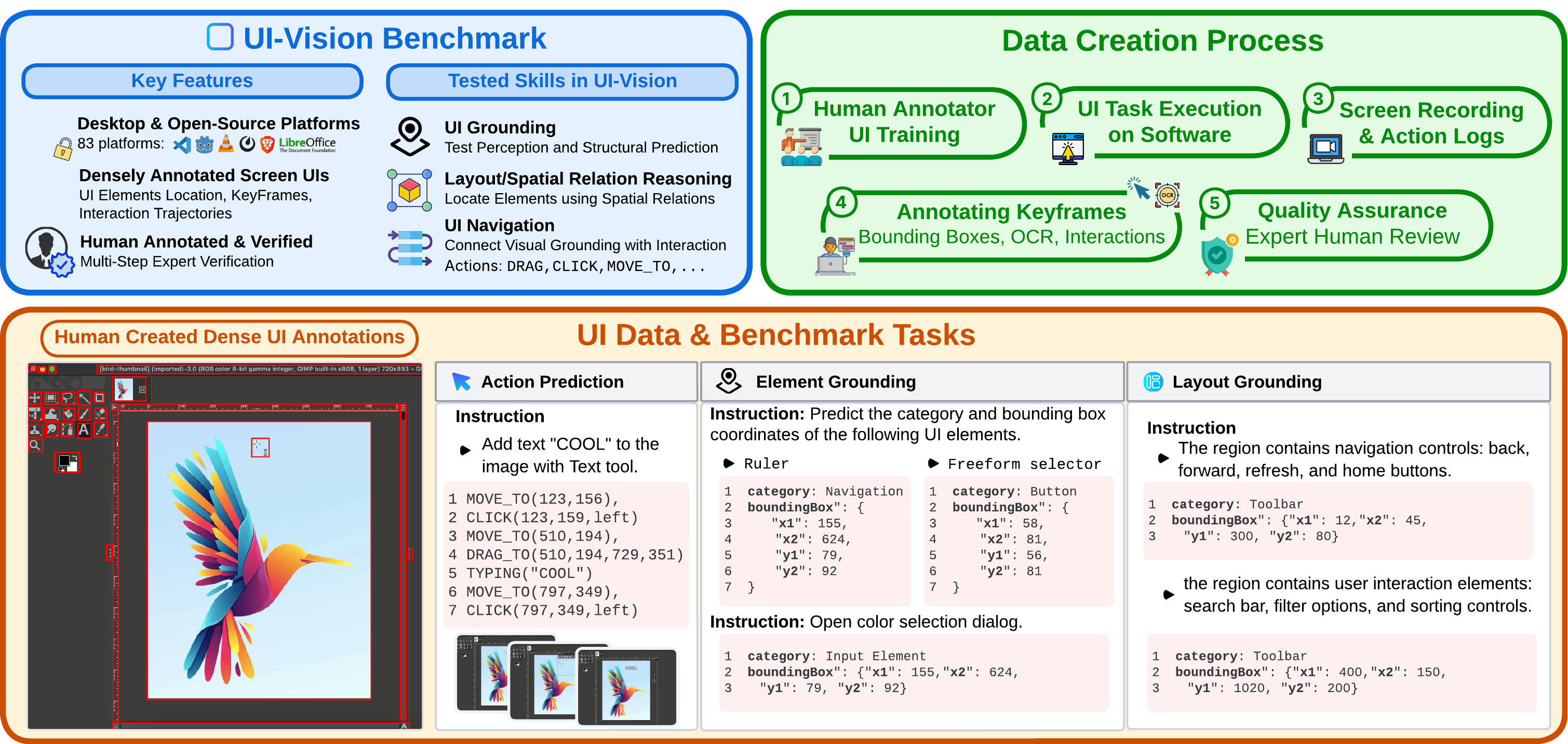

UI-Vision

Core Capabilities

Task Examples

Action Space

| Action | Description |
|---|---|
| Move(x, y) | Moves the mouse pointer to the coordinates (x, y). |
| Click(x, y, button) | Clicks the specified mouse button at the coordinates (x, y). |
| Typing('Hello') | Types the given string (here, `Hello`). |
| Hotkey('ctrl', 'c') | Presses a single key or a key combination (here, Ctrl+C). |
| Drag([x1,y1], [x2,y2]) | Drags the mouse from the start point (x1, y1) to the end point (x2, y2). |
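The table lists only the action signatures. As a rough illustration of how such an action space might be consumed, the sketch below parses action strings written in that notation into typed Python objects, for example to validate model outputs before replaying them. The class names, the `parse_action` helper, and the assumption that actions arrive as Python-like call strings are all hypothetical; UI-Vision's own tooling may represent actions differently.

```python
import ast
from dataclasses import dataclass
from typing import Tuple, Union

# Hypothetical typed representations of the five actions in the table above.
@dataclass
class Move:
    x: int
    y: int

@dataclass
class Click:
    x: int
    y: int
    button: str  # e.g. 'left' or 'right' (assumed button names)

@dataclass
class Typing:
    text: str

@dataclass
class Hotkey:
    keys: Tuple[str, ...]  # one key, or several pressed together

@dataclass
class Drag:
    start: Tuple[int, int]
    end: Tuple[int, int]

Action = Union[Move, Click, Typing, Hotkey, Drag]

def parse_action(s: str) -> Action:
    """Parse a call-style action string such as "Click(10, 20, 'left')".

    Assumes positional literal arguments only; this is an illustrative
    format, not an official UI-Vision specification.
    """
    node = ast.parse(s.strip(), mode="eval").body
    if not isinstance(node, ast.Call) or not isinstance(node.func, ast.Name):
        raise ValueError(f"not an action call: {s!r}")
    if node.keywords:
        raise ValueError("keyword arguments are not supported in this sketch")
    name = node.func.id
    # literal_eval safely converts literal nodes (numbers, strings, lists).
    args = [ast.literal_eval(arg) for arg in node.args]
    if name == "Move":
        return Move(*args)
    if name == "Click":
        return Click(*args)
    if name == "Typing":
        return Typing(*args)
    if name == "Hotkey":
        return Hotkey(tuple(args))
    if name == "Drag":
        start, end = args  # each is an [x, y] list
        return Drag(tuple(start), tuple(end))
    raise ValueError(f"unknown action: {name}")
```

For example, `parse_action("Drag([10, 20], [30, 40])")` returns `Drag(start=(10, 20), end=(30, 40))`. Using `ast.literal_eval` instead of `eval` keeps the parser from executing arbitrary code embedded in a model's output.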